LLM Components

We provide several LLM (Large Language Model) components that let you easily access and analyze financial data and real-time market data, and even let AI place orders.

You can use these LLM components through the LongPort OpenAPI. Start today!

LLMs Text

The OpenAPI documentation follows the LLMs Text (llms.txt) standard, providing an llms.txt index and a Markdown version of every document. You can give AI this llms.txt as a complete reference to the LongPort OpenAPI documentation during AI-assisted development, which helps it generate more accurate code.

- https://open.longportapp.com/llms.txt (approximately 2104 tokens)
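Because llms.txt is itself a Markdown file, a tool can read it to discover which documents exist. The following is a minimal Python sketch that extracts the linked documents, assuming the file uses the `- [Title](url): notes` list items under `## Section` headings described in the llms.txt proposal. `parse_llms_txt` and `fetch_llms_txt` are hypothetical helper names, not part of any LongPort SDK.

```python
import re
import urllib.request
from typing import List, Tuple

# Matches list items such as "- [Title](https://...)" (an optional ": notes" suffix is ignored).
LINK_ITEM = re.compile(r"^\s*-\s*\[([^\]]+)\]\(([^)\s]+)\)")


def parse_llms_txt(text: str) -> List[Tuple[str, str, str]]:
    """Return (section, title, url) for every linked document in llms.txt content."""
    entries = []
    section = ""
    for line in text.splitlines():
        if line.startswith("## "):
            # Remember the current H2 section so each link can be grouped under it.
            section = line[3:].strip()
            continue
        match = LINK_ITEM.match(line)
        if match:
            entries.append((section, match.group(1), match.group(2)))
    return entries


def fetch_llms_txt(url: str = "https://open.longportapp.com/llms.txt") -> str:
    """Download llms.txt as text (requires network access)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8")
```

For example, `parse_llms_txt(fetch_llms_txt())` gives you a list you can use to pick which Markdown pages to hand to an assistant, instead of pasting the whole index.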

Every document is also available in Markdown format: append the .md suffix to its URL.

For example:

- https://open.longportapp.com/docs/getting-started.md

- https://open.longportapp.com/docs/quote/pull/static.md
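The URL rule above is simple enough to automate. A minimal sketch, assuming every documentation page maps to its Markdown file by appending .md to the path; stripping a trailing slash and dropping any query string or #fragment are our own choices, and `to_markdown_url` is a hypothetical helper.

```python
from urllib.parse import urlsplit, urlunsplit


def to_markdown_url(doc_url: str) -> str:
    """Return the Markdown (.md) URL for a documentation page URL."""
    parts = urlsplit(doc_url)
    # Remove a trailing slash so "/docs/page/" becomes "/docs/page.md".
    path = parts.path.rstrip("/")
    if not path.endswith(".md"):
        path += ".md"
    # The query string and fragment are dropped; they do not apply to the Markdown file.
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))
```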

Demo

Using in Cursor

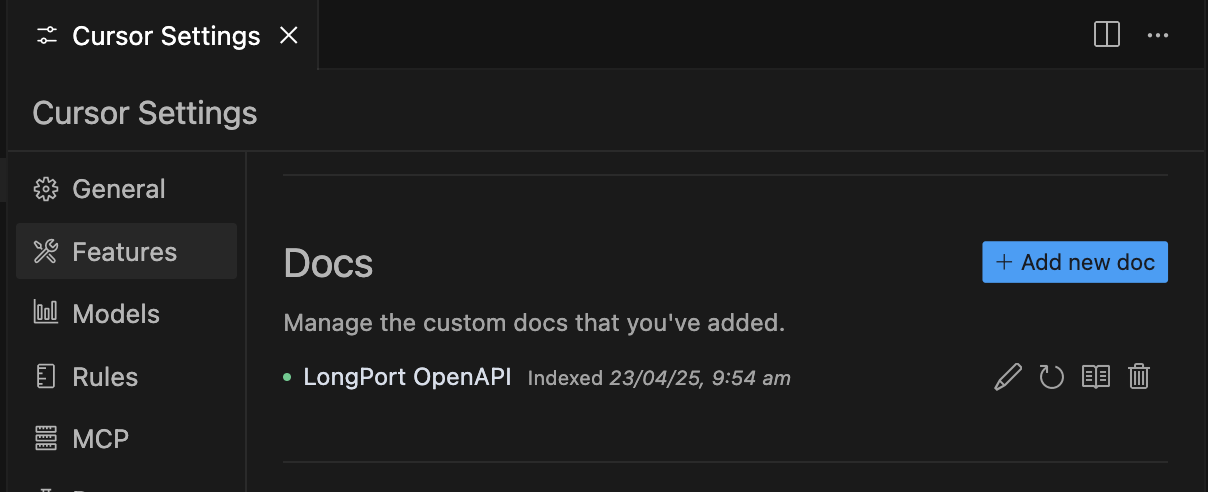

Open Cursor, open the command palette (Command + Shift + P), search for and select Add New Custom Docs and enter the LongPort OpenAPI LLMs Text address in the dialog box:

https://open.longportapp.com/llms.txt

Once added successfully, Cursor Settings will look like this:

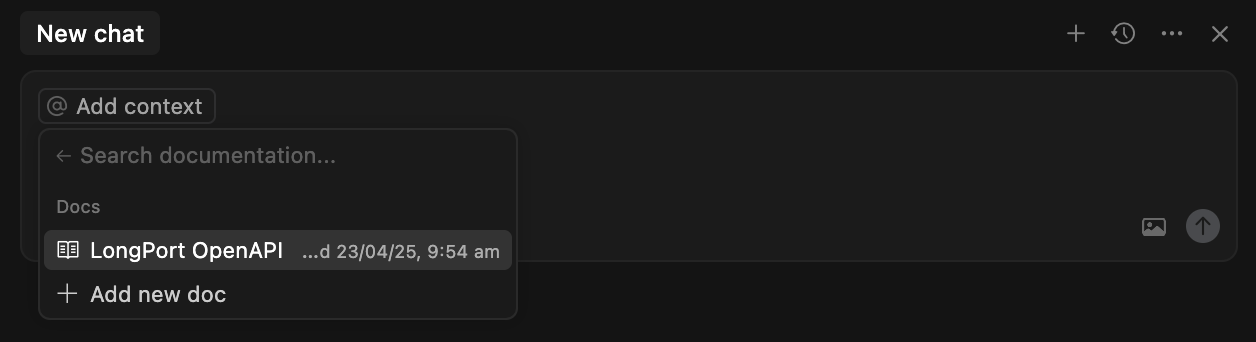

Then, in an AI conversation, open @Add Context, go to the Docs menu, and select the docs you just added. The AI will use these documents as context for the rest of the conversation.

MCP

We are building an MCP (Model Context Protocol) server for LongPort OpenAPI, based on our SDK, which you can use on any platform that supports MCP.

It is open source and available in our GitHub organization:

https://github.com/longportapp/openapi

Installation

Before starting, read the Getting Started guide and obtain your LONGPORT_APP_KEY, LONGPORT_APP_SECRET, and LONGPORT_ACCESS_TOKEN.
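The longport-mcp server receives these three values as environment variables, as in the `env` block of the Cursor mcp.json configuration later on this page. A small check like the following, a sketch and not part of LongPort's tooling, can catch a missing value before you start the server; `missing_credentials` is a hypothetical name.

```python
import os
from typing import List, Mapping, Optional

REQUIRED_VARS = ("LONGPORT_APP_KEY", "LONGPORT_APP_SECRET", "LONGPORT_ACCESS_TOKEN")


def missing_credentials(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the names of required LongPort variables that are unset or empty."""
    if env is None:
        # Default to the current process environment.
        env = os.environ
    return [name for name in REQUIRED_VARS if not env.get(name)]
```

An empty list means all three values are present; it does not check whether they are valid.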

macOS or Linux

You can run the following script in the terminal to install directly:

curl -sSL https://raw.githubusercontent.com/longportapp/openapi/refs/heads/main/mcp/install | bash

After the script finishes, longport-mcp will be installed in the /usr/local/bin/ directory. Run the following command to verify the installation:

longport-mcp -h

Windows

Visit https://github.com/longportapp/openapi/releases to download longport-mcp-x86_64-pc-windows-msvc.zip and extract longport-mcp.exe.
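An MCP client configuration needs the full path to the binary. The sketch below looks up longport-mcp on PATH with Python's `shutil.which` (which also matches longport-mcp.exe on Windows through PATHEXT) and falls back to /usr/local/bin/longport-mcp, the location used by the install script on macOS and Linux. `find_longport_mcp` is a hypothetical helper; on Windows it only finds the executable if the folder you extracted it to is on PATH.

```python
import os
import shutil
import sys
from typing import Optional

# Install location used by the macOS/Linux install script.
DEFAULT_UNIX_PATH = "/usr/local/bin/longport-mcp"


def find_longport_mcp() -> Optional[str]:
    """Return the path to the longport-mcp executable, or None if it cannot be found."""
    # On Windows, shutil.which tries PATHEXT extensions, so longport-mcp.exe is matched too.
    found = shutil.which("longport-mcp")
    if found:
        return found
    # Fall back to the script's default location in case /usr/local/bin is not on PATH.
    if sys.platform != "win32" and os.access(DEFAULT_UNIX_PATH, os.X_OK):
        return DEFAULT_UNIX_PATH
    return None
```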

Example Prompts

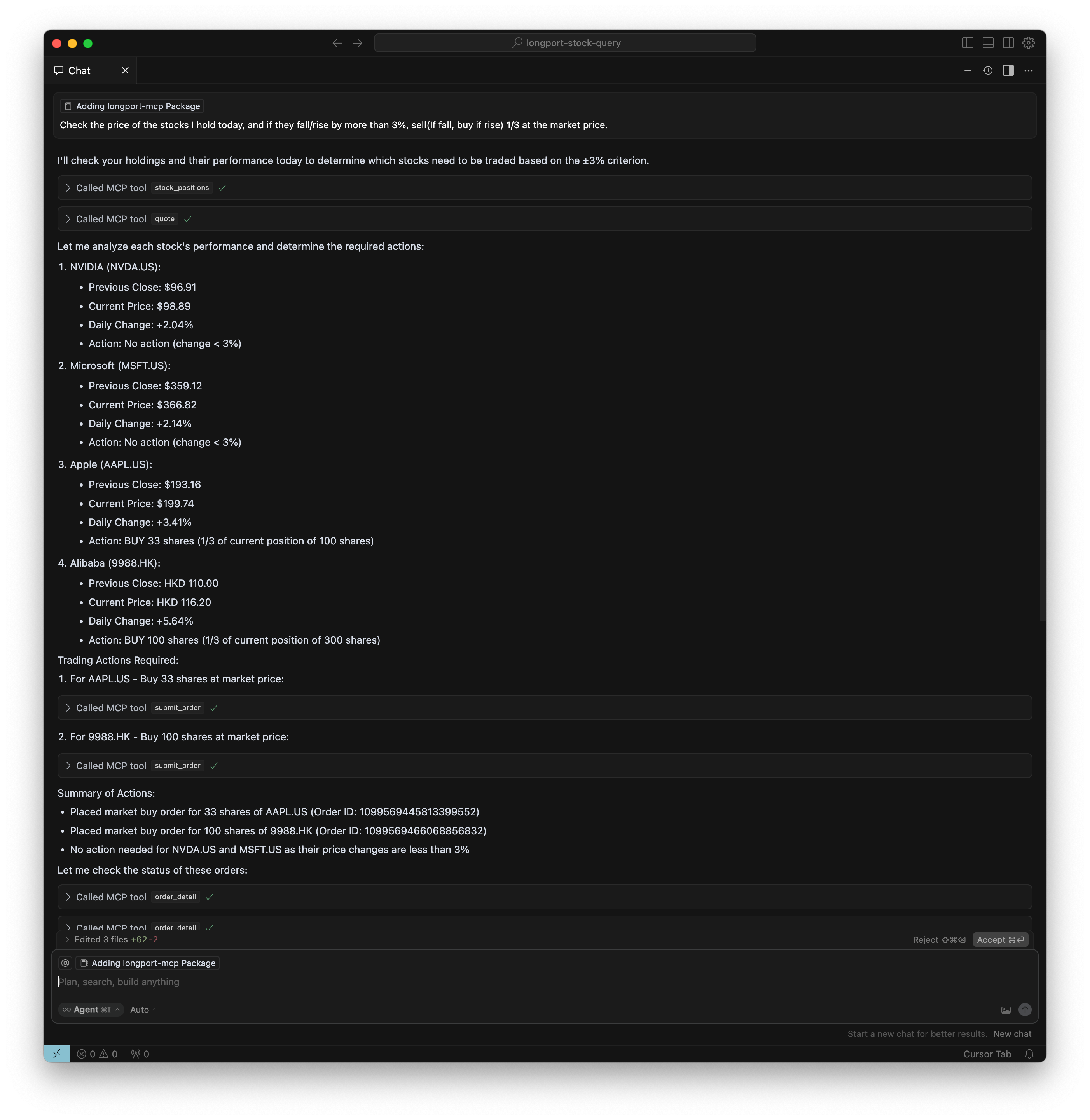

Once the server is set up and connected, you can talk with the AI using prompts such as:

- What's the current price of AAPL and TSLA stock?

- How has Tesla performed over the past month?

- Show me the current values of major market indices.

- What's the stock price history for TSLA, AAPL over the last year?

- Compare the performance of TSLA, AAPL and NVDA over the past 3 months.

- Generate a portfolio performance chart for the stocks I hold, and return a data table and a pie chart (just return the result, no code).

- Check today's prices of the stocks I hold. If any has fallen by more than 3%, sell 1/3 of that position at the market price; if any has risen by more than 3%, buy 1/3 more at the market price.

Using in Cursor

Open the command palette (Command + Shift + P), select Cursor Settings, and go to MCP Servers. Click the Add new global MCP server button.

In the opened mcp.json file, add the following content, replacing your-app-key, your-app-secret, and your-access-token with your actual values:

{
  "mcpServers": {
    "longport-mcp": {
      "command": "/usr/local/bin/longport-mcp",
      "env": {
        "LONGPORT_APP_KEY": "your-app-key",
        "LONGPORT_APP_SECRET": "your-app-secret",
        "LONGPORT_ACCESS_TOKEN": "your-access-token"
      }
    }
  }
}

Demo:

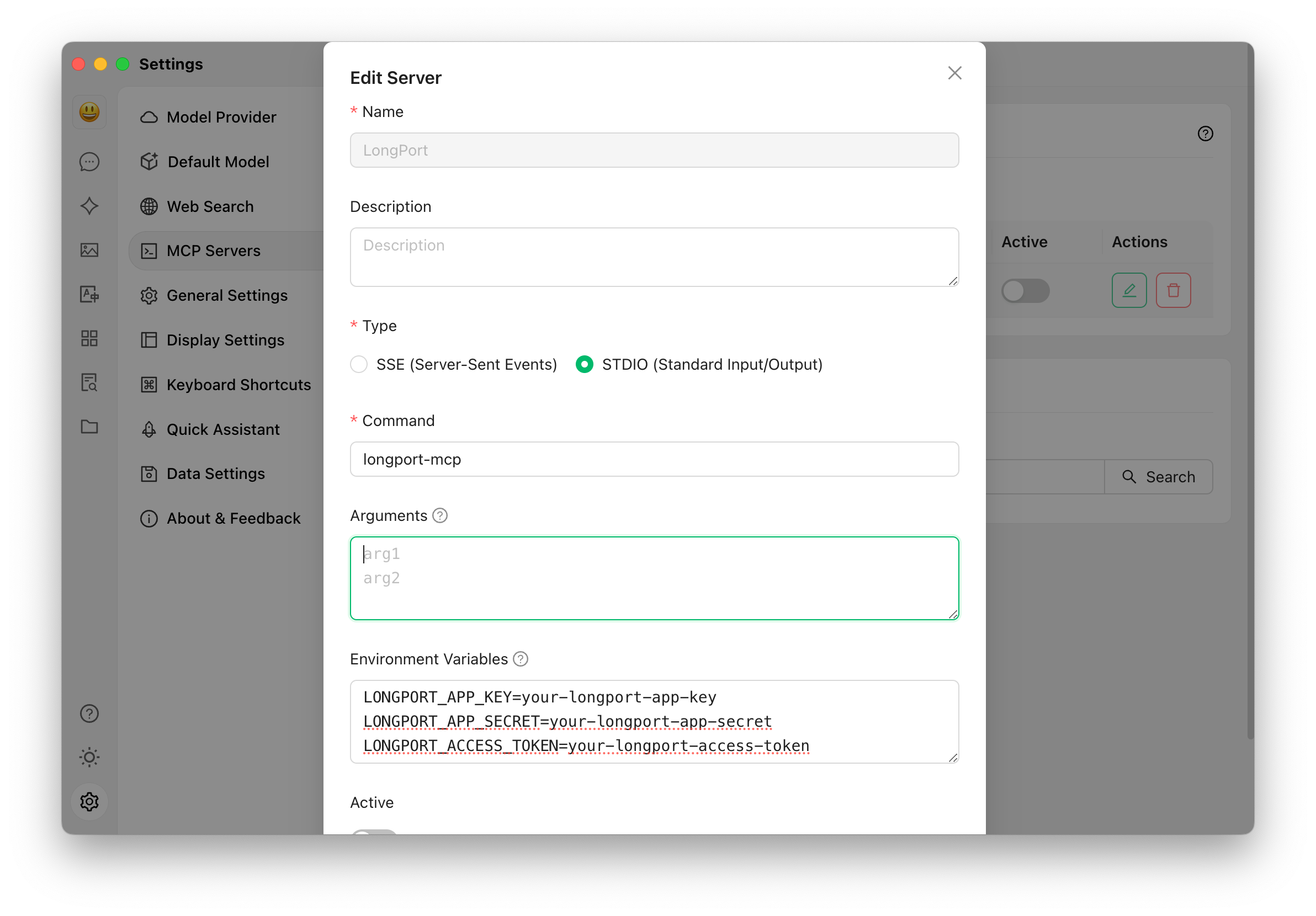

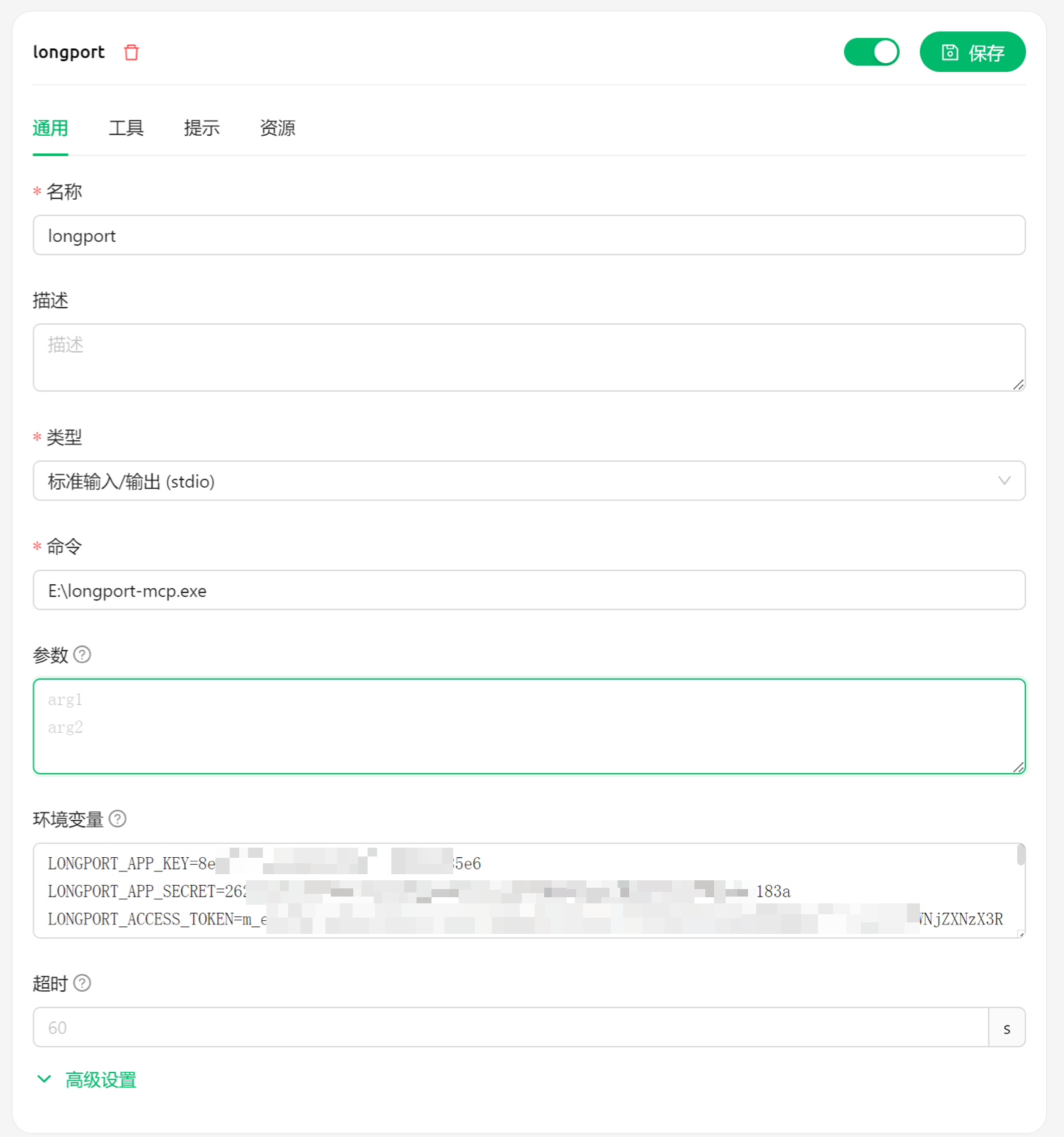
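If you prefer not to type the credentials into mcp.json by hand, the same structure can be generated. This is a minimal Python sketch, assuming the credentials are already exported as environment variables in your shell; any missing value falls back to the placeholder from the example above. `build_mcp_config` is a hypothetical helper. The output contains your secrets, so avoid committing it to version control.

```python
import json
import os
from typing import Any, Dict, Mapping, Optional

# Placeholder values from the mcp.json example, used when a variable is not set.
PLACEHOLDERS = {
    "LONGPORT_APP_KEY": "your-app-key",
    "LONGPORT_APP_SECRET": "your-app-secret",
    "LONGPORT_ACCESS_TOKEN": "your-access-token",
}


def build_mcp_config(command: str, env: Optional[Mapping[str, str]] = None) -> Dict[str, Any]:
    """Build the mcpServers entry for longport-mcp from environment variables."""
    if env is None:
        env = os.environ
    server_env = {name: env.get(name) or placeholder for name, placeholder in PLACEHOLDERS.items()}
    return {"mcpServers": {"longport-mcp": {"command": command, "env": server_env}}}
```

For example, run `print(json.dumps(build_mcp_config("/usr/local/bin/longport-mcp"), indent=2))` and paste the "longport-mcp" entry into the mcp.json file Cursor opened, keeping any other servers already listed there.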

Cherry Studio Configuration

This section shows how to configure LongPort MCP in an AI chat client, using Cherry Studio for the screenshots.

NOTE: Make sure you have updated Cherry Studio to the latest version.

Using STDIO Mode:

Ensure you have configured the environment variables and installed the longport-mcp command-line tool on your system.

On Windows, you can configure it like this:

If you are in China, you may need to add LONGPORT_REGION=cn to your environment configuration:

LONGPORT_REGION=cn
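In STDIO mode, the MCP client starts longport-mcp as a child process and talks to it over standard input and output, passing configuration through environment variables. The sketch below shows the equivalent in Python, assuming, as in the Cursor mcp.json example, that running longport-mcp with no arguments starts it in STDIO mode. `build_server_env` and `start_stdio_server` are hypothetical names; in practice Cherry Studio or Cursor launches the process for you.

```python
import os
import subprocess
from typing import Dict, Mapping, Optional


def build_server_env(region: Optional[str] = None, base: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Copy the base environment and optionally set LONGPORT_REGION (for example "cn")."""
    env = dict(os.environ if base is None else base)
    if region:
        env["LONGPORT_REGION"] = region
    return env


def start_stdio_server(command: str = "longport-mcp", region: Optional[str] = None) -> subprocess.Popen:
    """Start longport-mcp as a child process; the MCP client then speaks over its stdin/stdout."""
    return subprocess.Popen(
        [command],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        env=build_server_env(region),
    )
```

Setting the region on the child process's environment, rather than globally, keeps the change scoped to the server you launch.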